Our solutions for video analysis in sports generate lots of video files of athletes from different perspectives. This gave one of our working students from the University of Leipzig the idea for his master's thesis: Would it be possible to use the existing data to create a training data set for neural networks so that poses taken can be recognized automatically?

Usually, AIs (artificial intelligences) use images from one or more perspectives for 3D pose extraction; the algorithm recognizes the joints, calculates 3D coordinates from them and can thus determine the pose assumed. Currently, there is still too little training data for the AIs, which leads to inaccurately recognized poses and errors.

For example, a headstand causes problems in the calculation because the orientation of the body is different than learned from training data - head down, legs up. Another example that often confuses the algorithms is the occlusion of body parts. For example, if body parts are obscured by objects (ex. soccer ball, bubbles while swimming, etc.), the AI cannot recognize joints correctly and an incorrectly calculated pose results.

Training data for reliable 3D pose recognition

Therefore, the question was: is it possible to create training datasets from the existing video data to reliably train the algorithms in 3D pose recognition?

Several steps were necessary to create training data from the videos:

- Camera calibration

- Selection of image pairs for pose extraction

- Drawing joint coordinates into images

- Determination of the 3D coordinates of a joint

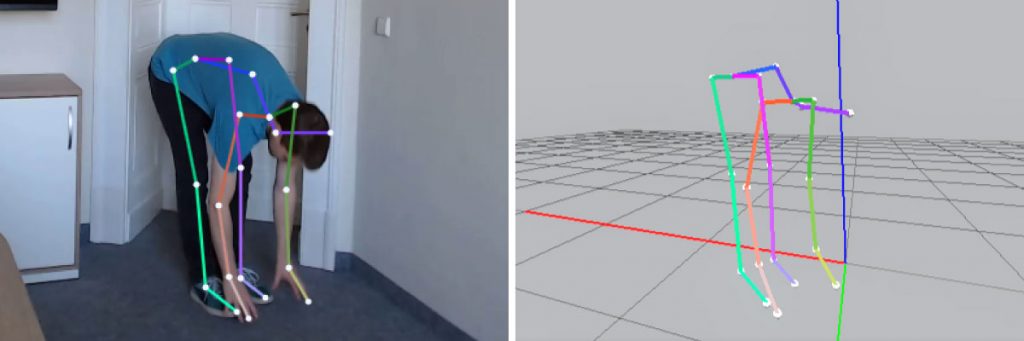

- Rendering of the extracted 3D skeleton for visual analysis

In order to create training data sets, the joints of the athletes were to be marked by hand in the existing videos and an algorithm was to calculate the corresponding joints of the 3D model from this. The problem: The positions of the cameras in relation to each other or to the object were unknown and thus the perspectives could not be set in relation to each other. As a solution, algorithms were used that calculate this relation on the basis of visual axes or defined patterns.

For the pose determination, several videos were played synchronously with a special video player and matching images were determined. In these images, the joints were then marked by hand. This means 21 joints and 19 bones per skeleton. Marking is therefore very time-consuming and also causes deviations, because it is very difficult to determine the exact position of the joints. Once all the joints have been marked, the 3D points are calculated using triangulation.

At the end you get the 3D coordinates of a skeleton from the two videos. These are rendered as stick figures in a 3D model. This data, together with the images, can be used to train AIs. These AIs are then used, for example, in the field of augmented reality or computer vision (e.g. load measurement of joints, gesture recognition).

Results of the development

At the end of his research our student came to the conclusion that with the chosen method the basic pose can be extracted (whether the athlete is sitting, standing, running, etc.), but accurate measurements are not possible this way, because the joint distances were partly calculated incorrectly and the bone length varies this way. The accuracy of the AI can hardly be improved in this way. Optimization approaches could be more perspectives and a better camera calibration.

Initial findings on camera calibration and projection calculation from this master's thesis have already been used in ski jumping - as a control of position trackers of ski jumpers. The video player component developed alongside the master thesis was also incorporated into our multicamera system utilius kiwano.