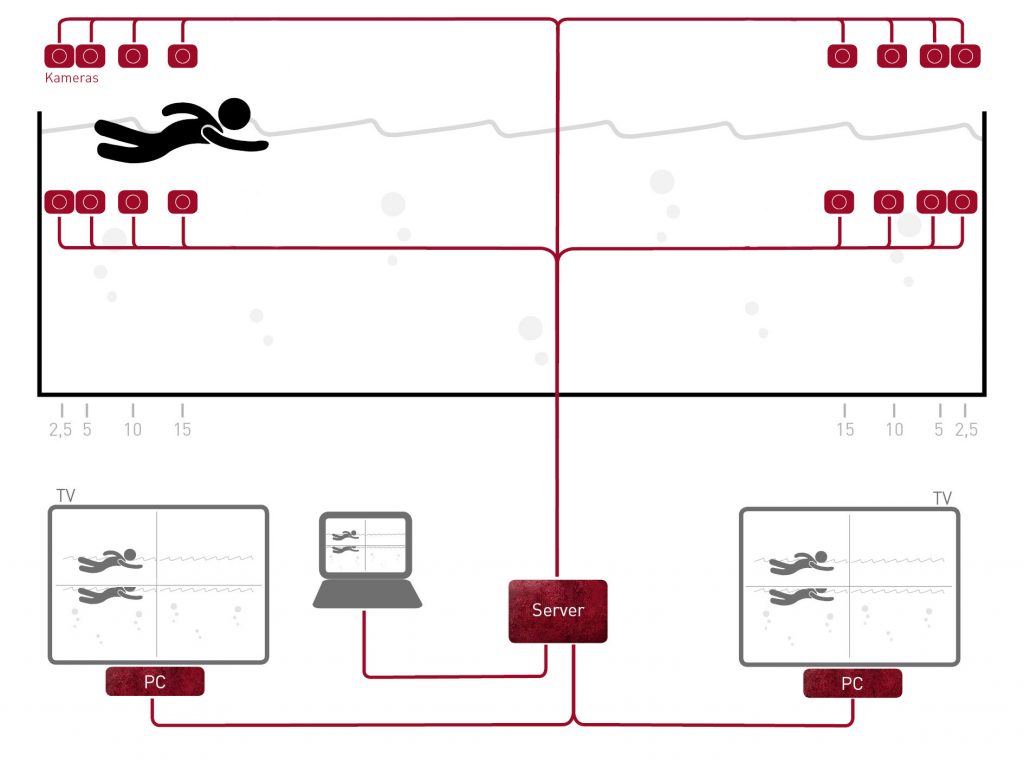

In swimming video analysis, athletes are usually recorded by several cameras and thus from several perspectives. For example, a multi-camera system can be set up with cameras installed along the side of the pool at 0 m (starting block), 2.5 m, 5 m, 10 m and 15 m, both underwater and above water. After training, the coach and athlete then have 5 individual recordings available.

The problem: for the evaluation, these 5 separate recordings have to be analyzed one by one, while the athlete quickly “swims through” the individual videos and is therefore only in frame for a short time in each of them.

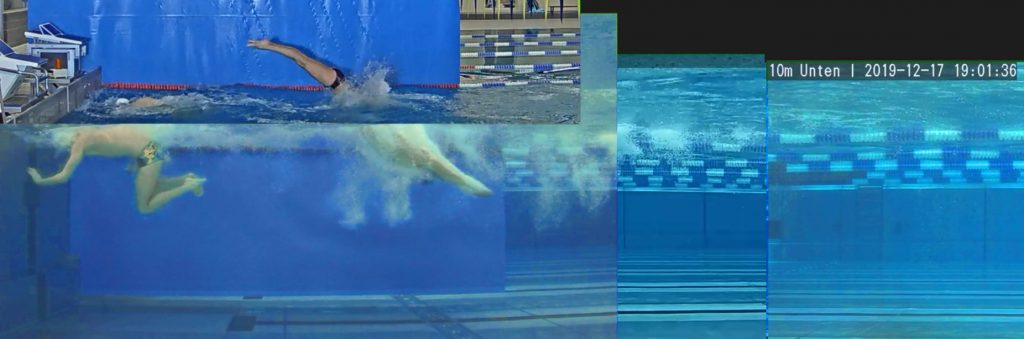

An automatic camera merge is intended to remedy this situation. The various camera views are combined in a single shot so that the athlete can be seen swimming through the different images at a glance. The result is a fluid video that shows the complete immersion phase of the athlete.

For this merge, a matching configuration has to be created for each camera setup. The edges of the image sections are aligned “by hand”, much like the pieces of a jigsaw puzzle. The pixel coordinates of the top-left corner of each image section are then calculated.
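As an illustration of what such a configuration might contain, here is a minimal Python sketch. It assumes the configuration boils down to one top-left pixel coordinate plus the frame size per camera view. The `Tile` class, the camera names and all numbers are hypothetical and not taken from the actual system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tile:
    """One camera view placed on the merged canvas (all values in pixels)."""
    name: str
    x: int        # left edge of the view on the canvas
    y: int        # top edge of the view on the canvas
    width: int
    height: int


def canvas_size(tiles):
    """Return (width, height) of the smallest canvas that contains every tile."""
    if not tiles:
        raise ValueError("at least one tile is required")
    width = max(t.x + t.width for t in tiles)
    height = max(t.y + t.height for t in tiles)
    return width, height


# Hypothetical layout: five 1280x720 views that overlap slightly at the seams.
layout = [
    Tile("cam_0m", 0, 0, 1280, 720),
    Tile("cam_2_5m", 1200, 8, 1280, 720),
    Tile("cam_5m", 2410, 4, 1280, 720),
    Tile("cam_10m", 3600, 10, 1280, 720),
    Tile("cam_15m", 4790, 2, 1280, 720),
]

merged_width, merged_height = canvas_size(layout)
```

The top-left coordinates come from aligning the views by hand. The canvas size follows from them, so it does not need to be stored separately.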

After that, a specially developed algorithm and the libraries of the FFmpeg project take over. The coordinates determine where each video is placed in the merged frame, so that a single video is created from several.
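The specially developed algorithm itself is not described here. As a rough stand-in, the following sketch shows how top-left coordinates could drive FFmpeg's `overlay` filter on top of a black `color` background. The helper `build_ffmpeg_command`, the file names and the coordinates are assumptions made for illustration. The sketch also assumes that the input videos are already synchronized, and it only builds the command without running it.

```python
def build_ffmpeg_command(inputs, positions, canvas, output):
    """Build an ffmpeg argument list that overlays each input at its (x, y)."""
    if not inputs:
        raise ValueError("at least one input video is required")
    if len(inputs) != len(positions):
        raise ValueError("need exactly one position per input video")

    width, height = canvas
    # Black background that defines the size of the merged frame.
    chains = [f"color=c=black:s={width}x{height}[bg]"]
    previous = "bg"
    for i, (x, y) in enumerate(positions):
        label = f"v{i}"
        # shortest=1 ends the output with the shorter stream, because the
        # color source on its own never ends.
        chains.append(
            f"[{previous}][{i}:v]overlay=x={x}:y={y}:shortest=1[{label}]"
        )
        previous = label

    cmd = ["ffmpeg"]
    for path in inputs:
        cmd += ["-i", path]
    cmd += ["-filter_complex", ";".join(chains), "-map", f"[{previous}]", output]
    return cmd


# Hypothetical example with two of the five camera files.
command = build_ffmpeg_command(
    inputs=["cam_0m.mp4", "cam_2_5m.mp4"],
    positions=[(0, 0), (1200, 8)],
    canvas=(2480, 728),
    output="merged.mp4",
)
```

The resulting list can be passed to `subprocess.run(command, check=True)` on a machine with FFmpeg installed. Later inputs are drawn over earlier ones, so the order of `inputs` decides which view is on top where two views overlap.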

However, the merge also has a drawback: at each seam between the images, a “kink” appears in the footage, caused by perspective distortion. The farther the athlete is from the camera, the larger the offset becomes.
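The size of this offset can be estimated with a simple model. The sketch below assumes ideal pinhole cameras with parallel optical axes and ignores lens distortion and refraction at the water surface, so it is only a rough approximation. It further assumes that the layout was aligned for objects at one reference distance. The function name and all numbers are hypothetical.

```python
def seam_offset_px(focal_px, camera_spacing_m, reference_dist_m, athlete_dist_m):
    """Estimate the horizontal misalignment (in pixels) at a seam.

    Two parallel pinhole cameras spaced camera_spacing_m apart see a point
    at distance Z shifted against each other by focal_px * spacing / Z.
    If the layout was aligned for reference_dist_m, the remaining offset for
    an athlete at athlete_dist_m is the difference of the two shifts.
    """
    if reference_dist_m <= 0 or athlete_dist_m <= 0:
        raise ValueError("distances must be positive")
    shift_reference = focal_px * camera_spacing_m / reference_dist_m
    shift_athlete = focal_px * camera_spacing_m / athlete_dist_m
    return abs(shift_reference - shift_athlete)


# Hypothetical numbers: layout aligned at 2 m, cameras 2.5 m apart.
offsets = [seam_offset_px(1000, 2.5, 2.0, d) for d in (2.0, 4.0, 7.0)]
```

Under these assumptions the offset is zero at the reference distance and grows as the athlete moves farther away from it, which matches the behaviour described above.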

To keep the offset as small as possible, the pixel coordinates should be specified separately for each lane, so that the merge can be optimized for the athlete's distance from the cameras. Before recording, the matching mode must then be selected so that the videos are joined with the correct coordinates.
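One way to picture such a mode is a lookup table of coordinate presets. The sketch below reads each path as one swimming lane and assumes that a mode is simply the preset for that lane. The dictionary `LANE_PRESETS`, the function name and the coordinates are hypothetical.

```python
# Hypothetical presets: lane number -> top-left (x, y) of each camera view, in pixels.
LANE_PRESETS = {
    1: [(0, 0), (1200, 8), (2410, 4), (3600, 10), (4790, 2)],
    4: [(0, 0), (1228, 8), (2466, 4), (3684, 10), (4902, 2)],
}


def positions_for_lane(lane, presets=LANE_PRESETS):
    """Return the top-left coordinates configured for the given lane."""
    try:
        return presets[lane]
    except KeyError:
        raise ValueError(f"no merge preset configured for lane {lane}") from None


# Selecting the mode before recording then amounts to picking one preset.
selected = positions_for_lane(4)
```

Failing loudly for an unconfigured lane is deliberate here: silently falling back to another lane's coordinates would reintroduce the offset at the seams.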